When you think of AI in terms of products or apps that you and I can use off-the-shelf, there are currently at least four categories:

LLMs like ChatGPT, DeepSeek or Claude, which are best described as smart interns that you can spar with or that can help break down problems. Lots of people have written lots on them - on their capabilities (smart problem solving for 20 USD per month), but also their limits (getting things wrong, making superficial connections, not really thinking deeply, weird wording). I love working with LLMs.

Copilots, such as Microsoft M365 Copilot or the new Apple Intelligence features (including the AI-powered Siri) on the latest generation of Apple hardware. They summarize emails, help improve grammar and style, help with complex requests like picking the right Excel formula - and are baked into existing software. So far, they have had a pretty mixed record, I would say. Value in a business is created by launching products, creating things, improving processes, fixing things, lowering costs. It is not created by helping staff write emails slightly faster. To describe something of a horror scenario for me: Copilots effectively help one group of staff write longer, smarter emails, while they help another group of staff summarize these emails.

A few first AI research tools for normal users, e.g., Google NotebookLM or Deep Research from OpenAI. Both read through research material or journals and can generate studies, outlines, learning material or even a podcast (in the case of NotebookLM). If your job involves doing research, reviewing studies, etc., these are probably very useful tools - and you are likely using them already. If not, you may not even have heard of them - and they may not impact you at all. They are what Benedict Evans calls "an amazing demo, until [they] break."

AI-powered search engines. Perplexity.ai is the only mature one so far (in my view), and for some use cases like health, sports, nutrition it has replaced Google for me. I love this AI use case - and the user experience is so similar to using Google that I can see Perplexity taking over a lot of market share from Google.

These four categories represent today's AI landscape - tools you could incorporate into your workflow immediately. But there's a fifth category emerging that could fundamentally reshape how we think about AI: autonomous agents.

AI agents

An agent represents something fundamentally different: an AI application that can think and act with relative independence. The concept remains somewhat nebulous, and there are no market-ready examples yet, but the potential for businesses and individuals looks rather promising. So let's look at agents.

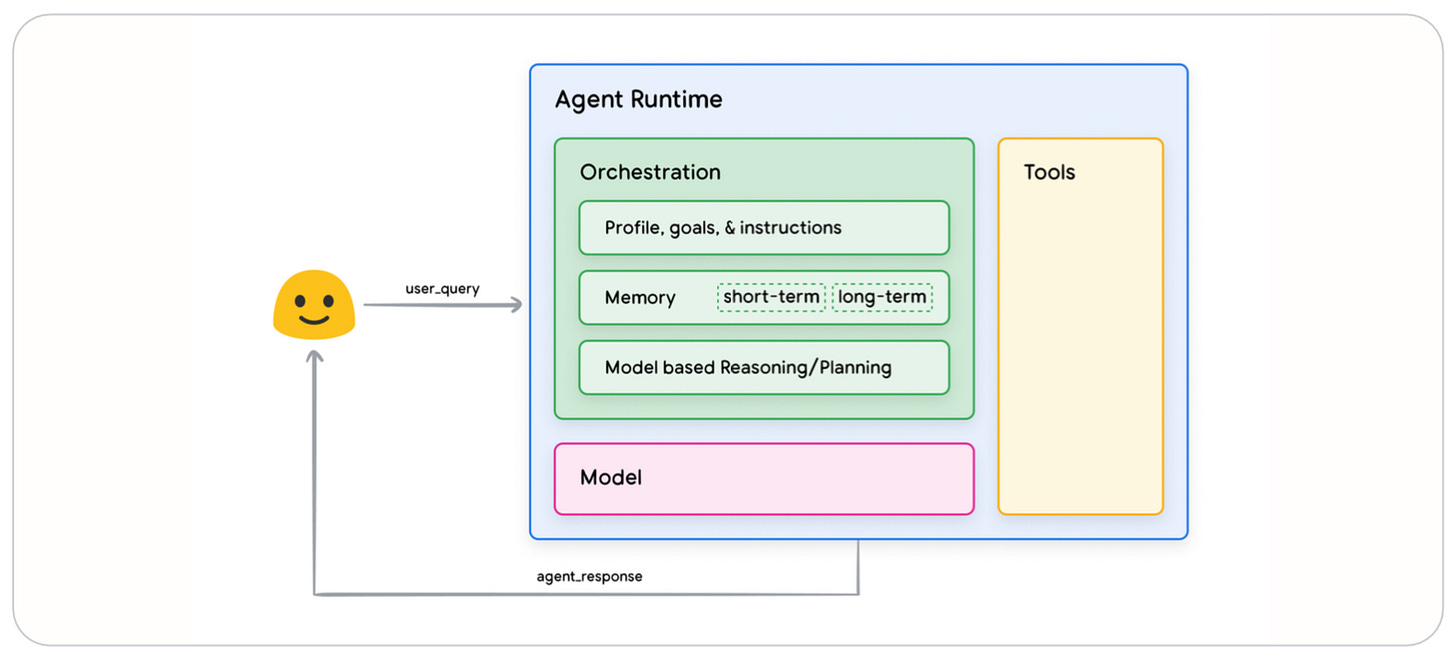

At its core, an agent combines an LLM's reasoning capabilities with several critical extensions: access to real-world data (internet, company databases, research databases), the ability to independently retrieve information it deems relevant, persistent memory of completed tasks, and operation under a framework of goals and instructions with human oversight. These agents operate in cycles: gathering information, processing it, exploring alternatives, collecting additional data, and making incremental decisions until reaching their objectives.
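To make that cycle a bit more tangible, here is a minimal sketch in Python. Everything in it is an assumption for illustration: the `Agent` class, the `decide` function (a stand-in for the LLM's reasoning), the toy "web" and "database" tools, and the `max_steps` limit (a crude form of human-set guardrail) are all made up, and a real agent would be far more involved.

```python
from dataclasses import dataclass, field
from typing import Callable


@dataclass
class Agent:
    goal: str                                  # the objective set by a human
    tools: dict[str, Callable[[str], str]]     # hypothetical data sources the agent may query
    decide: Callable[[str, list[str]], str]    # stand-in for the LLM: returns a tool name or "done"
    max_steps: int = 5                         # guardrail: stop after this many cycles
    memory: list[str] = field(default_factory=list)  # record of what was done so far

    def run(self) -> list[str]:
        for _ in range(self.max_steps):
            action = self.decide(self.goal, self.memory)
            if action == "done":               # the agent considers the goal reached
                break
            if action not in self.tools:       # stuck: record it and hand back to a human
                self.memory.append(f"escalate: unknown tool {action!r}")
                break
            observation = self.tools[action](self.goal)      # gather information
            self.memory.append(f"{action}: {observation}")   # remember the result
        return self.memory


def toy_decide(goal: str, memory: list[str]) -> str:
    """Toy policy (not a real LLM): consult each source once, then stop."""
    for name in ("web", "database"):
        if not any(entry.startswith(name + ":") for entry in memory):
            return name
    return "done"


agent = Agent(
    goal="supplier prices",
    tools={
        "web": lambda g: f"public info on {g}",
        "database": lambda g: f"internal records on {g}",
    },
    decide=toy_decide,
)
log = agent.run()
print(log)
```

The point of the sketch is the shape of the loop, not the details: decide, act, remember, repeat, and stop either when the goal is reached, when the step limit is hit, or when the agent gets stuck and needs a human.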

So how does an agent work in practice - and fit into our lives? There are probably two frameworks to conceptualize agents in super practical terms:

Agents as colleagues that are (so far) a good bit dumber and less reliable than the average human, or…

Agents as a totally different animal that operates on its own, with some human intervention when it gets stuck.

Depending on your chosen framework, the potential impact and adoption of agents could play out rather differently.

Framework #1: Agents as colleagues

If you take this perspective, employing agents is rather similar to offshoring functions: for some 15-20 years, Western companies have had the option to set up low-cost service centers in lower-income countries - and most have already transferred all suitable roles and tasks, i.e., those that are rather predictable, follow a specific process and have clear success KPIs. Costs can be as low as a few hundred USD per employee per month, which is significantly below North American or European costs. Offshoring has probably increased shareholder value for a lot of companies and created lots of jobs in these countries, but it did not unlock a golden era of productivity (which is what AI promises to do).

Using AI agents or having service centers is not too dissimilar on a high level - you move certain tasks over to new colleagues. There are, however, a few meaningful differences that make using agents potentially more attractive to companies. You can start small with AI agents, e.g., have just a few of them running, and don't need hundreds or thousands of them. This lower threshold should allow many more, and much smaller, businesses to offshore to agents. Also, you can have a mixed team of humans and AI agents, working closely with each other. This is not that feasible with offshoring - you cannot really have one team member in Europe/North America and one in India or the Philippines and expect them to work efficiently as a full team, e.g., due to different time zones. Also, costs for AI agents are much lower - and you have no constant training needs or high turnover rates. All these factors should make it easier for many more companies to move functions or tasks to agents, including companies that would never have considered offshoring to countries with lower labor costs.

The key obstacle I see to rolling out AI agents at scale in a corporate environment is that it's very difficult to get managers or team leads to introduce these agents themselves. They may not know which roles are better suited for agents and which for humans. They need to write SOPs and process manuals, which requires a lot of time and self-awareness (which is in short supply). Yes, you can hire consultants to help you introduce agents, but this is pricey, and they may get it wrong too. I'm skeptical that these hybrid human-agent teams will automatically generate cost savings or efficiency improvements at scale. Managers and staff may be opposed to these changes for good or not so good reasons, e.g., because the changes create friction or more work in the ramp-up period, take decision power away from humans, require more structured processes and decision-making, or may generally just create visceral discomfort among real humans. Also, working with agents requires new skill sets that managers need to build, e.g., supervising agents, adjusting goals, tweaking models, documenting processes and SOPs - rather than just performance-managing a human. Across the board, working with agents requires staff to have a nuanced self-awareness of their style of work, their own preferences, and the limits of their knowledge and skills - and my experience is that these levels of self-awareness are rather low with most of us.

Framework #2: Agents as a totally different animal

Perhaps comparing agents to human colleagues fundamentally misses the point. Agents are so different that we may not be constrained by having to fit them into a human organization, working with existing processes and existing staff. Let's keep in mind: organizations are man-made, and have grown over time. Take accounting as an example: it is a man-made definition of roles. We probably started off realizing we need somebody who supervises the books of a firm - knowing how much you owe to whom is rather critical. It makes sense that this team also tracks, checks and credits/pays the invoices we issue and receive. And they should also do reporting around the overall balance sheet. We decided to call this “accounting” - and probably every company has now set up a team that does that. But with agents you can choose a different footprint - e.g., have one agent cover pricing, invoice creation and invoice checking. These are two or three totally different teams in many companies, but only because each role draws on different human skill sets. For agents, it could well be one.

If you wanted to introduce agents on a bigger scale, you could therefore totally redesign your processes (or better: use AI to redesign them) and find the optimal allocation of roles to agents, which may look totally different from what you have today. That could be a huge game changer, as it could allow faster, smarter and eventually better decisions. Staff can focus on value creation, rather than admin work. Let's keep in mind that man-made organizations are not perfect clockworks of efficiency and rational decision making. Value creation is not evenly distributed across all staff - stealing from Mr Pareto, let's assume that 20% of staff probably create 80% of overall value. Or, drawing on the O-ring model, the least efficient parts of a company define the overall throughput and value creation. So even if you speed up some parts of the decision making with AI, or create better quality output, this may not translate into better overall results. However, if you let agents run processes more end-to-end, that could overcome some of these bottlenecks (while of course creating new issues, like opaque decision making or lack of oversight).
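The bottleneck argument can be put into a few lines of arithmetic. The sketch below is a deliberate simplification under my own assumptions: it treats a process as a simple chain of stages where the slowest stage sets the pace (the bottleneck reading used above, not the formal O-ring model), and the stage names and daily capacities are invented numbers.

```python
def throughput(stage_capacity: dict[str, int]) -> int:
    """Cases per day a sequential process can complete: the slowest stage sets the pace."""
    return min(stage_capacity.values())


# Invented capacities (cases per day) for a three-stage process.
baseline = {"drafting": 40, "review": 10, "approval": 25}

# AI doubles the speed of one stage that was never the bottleneck.
faster_drafting = {**baseline, "drafting": 80}

# Hypothetical: an agent runs every stage end-to-end at 30 cases per day.
end_to_end = {stage: 30 for stage in baseline}

print(throughput(baseline))         # 10
print(throughput(faster_drafting))  # 10
print(throughput(end_to_end))       # 30
```

Doubling the drafting speed changes nothing, because review still caps the process at 10 cases per day. Only when the slowest stage itself gets faster does the overall number move.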

We may be a few months or potentially years away from agents being deployed this way, but it's worth thinking about it now. I can see this working for small enterprises or younger start-ups that are more flexible, may not have completely formalized processes and may have staff who can jump between processes and roles. In particular, I can see agents working wonders for freelancers or very small companies. There are no legacy processes, there is super clear ownership and accountability (you!), and you can design your much simpler processes from scratch or throw existing workflows or tools overboard quite easily.

However, for larger firms it will probably take too long or be too risky to try altogether. Even just improving existing processes often requires external help (e.g., lean coaches or process consultants on the lower end of the price spectrum, or strategy consultants on the higher end), or an internal process excellence team. Completely redesigning them is probably a bit too much for many established companies. I am not saying it cannot happen, but it will take time and effort.

Looking ahead

We're entering an exciting phase in AI's development where agents represent something fundamentally different from the current tools we've grown accustomed to. Unlike LLMs or copilots that respond to our prompts, agents could reshape how work gets done. The potential is substantial, but we're still in early days. Agents will require thoughtful integration, careful supervision, and likely significant process redesign to deliver their full value.

As always, I'm interested in your thoughts: Feel free to message me. Or, if you prefer, you can share your feedback anonymously via this form.

All opinions are my own. Please be mindful of your company's rules for AI tools and use good judgment when dealing with sensitive data.